Big Brother Is Watching

Rahul K. Shah, MD, George Washington University School of Medicine, Children’s National Medical Center, Washington, DC

I have often chatted with fellow physicians and Academy members about the massive amount of data being accumulated on our practice patterns, not only by our own hospitals and payers (private and government), but also by sources such as electronic medical records and even companies that track patient satisfaction scores. As big data becomes more manageable in the digital revolution, the ability to sift through hundreds of thousands of records becomes the expected norm rather than an anomaly. The major concern, then, is what comes of this big data, and how it is synthesized and analyzed before it is presented.

A major player in the electronic medical records industry, Practice Fusion®, recently came under fire from practices that used its free platform. The concern was the company’s intent to aggregate big data, parcel it, and sell it for analysis (for example, macro-level data on thousands of patients with diabetes could be invaluable to a pharmaceutical company).

There must be similar concern about what is going to happen to the practice of medicine under the extreme scrutiny of the untrained, emotional eye of the observer. For example, what if the big data demonstrates that, in my practice region of the greater Washington, DC, area, I am an outlier for complications (this is hypothetical, of course)? What if the data demonstrated that I had a higher-than-average right-sided post-tonsillectomy bleed rate? Remember, big data can be analyzed, twisted, and turned in myriad fashions. So, let us play out the scenario above. What are my patients going to do when they find out that my right-sided post-tonsillectomy bleed rate is higher than that of my peers in our practice region? Furthermore, what is the hospital going to do about credentialing, my focused practice-performance evaluations, and my privileges? Will my right-tonsillectomy privileges be rescinded? What if all of my patients who bled in the preceding time period had bleeding diatheses that were neither captured nor reported by those who aggregate our patient data (i.e., lack of risk adjustment)?
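To make the risk-adjustment point concrete, here is a minimal sketch in Python. Every number in it is invented for illustration: the case mix, the per-patient expected bleed risks, and the regional benchmark are all assumptions, not real data. It compares a crude bleed rate with an observed-to-expected (O/E) ratio, which is one common way to adjust for patient risk, though not necessarily the method any particular data aggregator uses.

```python
from dataclasses import dataclass


@dataclass
class Case:
    bled: bool            # whether a post-tonsillectomy bleed was observed
    expected_risk: float  # hypothetical predicted bleed probability for this patient


def crude_rate(cases):
    """Bleeds divided by total cases, with no adjustment for patient risk."""
    return sum(c.bled for c in cases) / len(cases)


def observed_to_expected(cases):
    """Observed bleeds divided by the sum of per-patient expected risks."""
    observed = sum(c.bled for c in cases)
    expected = sum(c.expected_risk for c in cases)
    return observed / expected


# Hypothetical surgeon with 100 cases (all values are made up):
# 20 patients with a bleeding diathesis (assumed risk 0.20), 4 of whom bled,
# 80 patients without one (assumed risk 0.03), 2 of whom bled.
high_risk = [Case(bled=i < 4, expected_risk=0.20) for i in range(20)]
low_risk = [Case(bled=i < 2, expected_risk=0.03) for i in range(80)]
surgeon = high_risk + low_risk

PEER_CRUDE_RATE = 0.04  # hypothetical regional benchmark

crude = crude_rate(surgeon)          # 6 bleeds / 100 cases
oe = observed_to_expected(surgeon)   # 6 observed / (4.0 + 2.4) expected

print(f"Crude bleed rate: {crude:.1%} (benchmark {PEER_CRUDE_RATE:.1%})")
print(f"Observed-to-expected ratio: {oe:.2f}")
```

In this made-up scenario the crude rate sits above the benchmark, yet the O/E ratio is below 1, meaning fewer bleeds occurred than the case mix would predict. If the bleeding diatheses were never captured, only the crude figure would be reported.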

This issue is distinct from physician review sites, such as ZocDoc.com, which are public sites where people write about their physicians and can rank them. These sites provide more granular data that one can argue is actionable. However, the big data being collected about our practice patterns and outcomes is different because it is provided to agencies by hospital administrative staff and often lacks granularity or actionable detail. There can also be issues with attribution and coding of cases, which can affect the macro-level trend data.

So What Are We To Do?

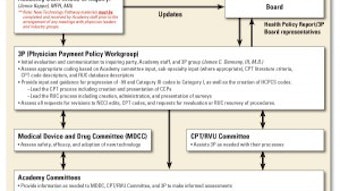

There are many options, but two resonate the most with me. First, get to know the major data reporting repositories so that you understand their methodology and how they report data. Understanding how the data is collected and what it means can help you explain to your patients why the hospital you operate at is below the national benchmarks for specific case types and various other indicators (hospitalcompare.hhs.gov). Once you know where your data and your hospital’s data are being reported, you can speak with the administrative individual at your local hospital who provides the data to ensure proper and complete case capture.

Finally, we must own our patients’ outcomes and data. There are now data registries that one can sign up for through national organizations, such as the American College of Surgeons. If we can be stewards of our own data, then we can ensure that it is risk-adjusted, accurate, and reflective of our true practice patterns.

On a personal level, this article is even more pertinent because my real “big brother” is also a pediatric otolaryngologist, at Nemours in Wilmington, DE. He can watch his younger brother anytime, and pretty soon, with the way that data transparency is coming along, he will not even have to watch me: he can go to the Internet and check my metrics to ensure that I am causing no harm!

We encourage members to write to us with any topic of interest, and we will try to research and discuss the issue. Members’ names are published only after they have been contacted directly by Academy staff and have given consent to the use of their names. Please email the Academy at qualityimprovement@entnet.org to engage us in a patient safety and quality discussion that is pertinent to your practice.