AI in ENT: What You Should Know

Having a good understanding of the benefits, risks, and various intangible case details of AI will help us do better by our patients.

Brian D’Anza, MD, and N. Scott Howard, MD, Medical Informatics Committee members

How Do We Define AI?

The National Artificial Intelligence Initiative Act of 2020 defines AI as “a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations, or decisions influencing real or virtual environments.”1 But can we break it down a little more practically?

Cal Al-Dhubaib, PhD, founder and AI strategist at Pandata, an AI-focused health tech company, says, “I describe AI as software that does two things: recognize patterns and react to them.” Dr. Al-Dhubaib continues, “These patterns can be simple spreadsheets or complex medical images, clinical notes, or all of the above.”

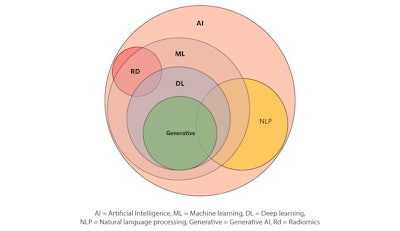

Reacting to patterns is a familiar concept for most physicians, but computers can often do this faster and, in some circumstances, better than humans by identifying patterns that might otherwise have been missed. However, these AI-powered tools have also been shown to produce responses that sound authoritative yet may be untrue, which is a real concern. To understand how AI works, you need to understand some of its basic features, such as how it is employed and categorized. Some of the confusion among physicians stems from the unfamiliar names given to the various categories of the technology. These names specify the type of AI work being done. See Figure 1 for an overview of classification strategies and how these areas interact.

Figure 1. AI classification strategies and how these areas interact.


Let’s start from the circle labeled ML: The term “machine learning” (ML) describes areas of AI in which a computer system “learns” from large datasets to improve its performance on a task over time.2 Think of it as a self-learning process that ingests a lot of data and, as it does, produces more accurate results. Different ML processes use algorithms of various shapes and sizes to make this work. These range from conventional statistical models, such as logistic regression, all the way to deep learning algorithms built on neural networks. Deep learning (DL) neural networks rely on vast amounts of data to drive capabilities such as image and speech recognition, natural language processing, and generative AI. Generative AI includes well-known technologies such as ChatGPT and DALL-E, which create new content such as text, images, music, or video using algorithms that learn patterns from existing data and produce new output that resembles it.
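To make the idea of "learning from data" concrete, here is a minimal sketch of logistic regression, the simplest model mentioned above, fitted by gradient descent. Everything in it is an assumption made for illustration: the data are synthetic, and the single input `x` stands in for any hypothetical clinical measurement. It is not a clinical model, only a picture of how error shrinks as the algorithm adjusts itself to the data.

```python
import math
import random


def sigmoid(z):
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(xs, ys, w, b):
    """Average prediction error; lower means the model fits the data better."""
    eps = 1e-12  # avoids log(0)
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(xs)


def train_logistic(xs, ys, epochs, lr=0.1):
    """Fit weight w and bias b by repeatedly nudging them to reduce the error."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / len(xs)
        b -= lr * grad_b / len(xs)
    return w, b


# Synthetic data (an assumption): label 1 tends to have higher x than label 0.
random.seed(0)
ys = [i % 2 for i in range(200)]
xs = [random.gauss(0, 1) + (1.5 if y else -1.5) for y in ys]

loss_before = log_loss(xs, ys, 0.0, 0.0)   # untrained model
w, b = train_logistic(xs, ys, epochs=200)
loss_after = log_loss(xs, ys, w, b)        # after "learning" from the data
print(f"loss before: {loss_before:.3f}, loss after: {loss_after:.3f}")
```

The untrained model guesses 50/50 for every case; after training, the error is lower because the model has picked up the pattern that higher values of `x` go with label 1. Deep learning follows the same loop with far more adjustable parameters and far more data.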

Practical Applications in Otolaryngology

Some practical applications of AI are already important to the otolaryngologist. The first is natural language processing (NLP). This is closely related to what your phone or transcription technology already does: taking the acoustic energy of your voice and translating it into words and phrases. As otolaryngologists, we are already studying ways to extend these abilities beyond syntax to understand pathological issues with voice quality, to characterize speech disorders, and to improve speech understanding in noise for patients with cochlear implants.3

NLP can also evaluate unstructured data in our notes. This represents a huge opportunity to improve how we categorize and use data by consolidating information captured in disparate places and by different means (e.g., the vitals you record in your note versus the vitals posted by the monitor) into a single source. It can also be applied more broadly to publicly available datasets. One study used NLP to evaluate a subject all physicians surely love: patterns in patient satisfaction ratings of otolaryngologists.4 In this study, an NLP sentiment analysis was used to review websites to determine whether particular demographic factors were associated with “better” or “worse” ratings.
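As a rough illustration of what sentiment analysis does, the sketch below scores free-text reviews by counting words from two small word lists. This is a toy lexicon-based approach chosen for clarity, not the method used in the cited study; the word lists, the example reviews, and the `group` field are all hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical word lists; real sentiment tools use far larger, validated lexicons.
POSITIVE = {"caring", "thorough", "excellent", "helpful", "kind"}
NEGATIVE = {"rude", "rushed", "dismissive", "late", "unhelpful"}


def sentiment_score(text):
    """Return (# positive words) - (# negative words) for one review."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def mean_score_by_group(reviews):
    """Average the sentiment score within each group label."""
    scores = defaultdict(list)
    for review in reviews:
        scores[review["group"]].append(sentiment_score(review["text"]))
    return {group: sum(vals) / len(vals) for group, vals in scores.items()}


# Made-up reviews, grouped by an arbitrary attribute for illustration.
reviews = [
    {"group": "A", "text": "The doctor was kind and thorough."},
    {"group": "A", "text": "Excellent visit, very helpful staff."},
    {"group": "B", "text": "Rude and rushed visit."},
    {"group": "B", "text": "Appointment started on time."},
]

print(mean_score_by_group(reviews))
```

Grouping scores by an attribute and comparing the averages is the basic shape of the analysis described above; a real study would also need a validated sentiment model and appropriate statistical testing.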

Another area of popular interest in AI is radiomics and its ability to aid medical image-based analysis and prediction. Radiomics is a branch of AI that extracts large volumes of image features and correlates them with known clinical details to develop predictive models that can be used on additional images.5 The basic radiomics workflow can be broken down into distinct steps that involve acquiring images, uploading the images and the clinical data of interest into the AI system, and then developing models for predicting outcomes. One such example would be using thousands of images in a hospital data repository that identify neck masses and then correlating them with HPV status.6,7 Much work is already being done in this space. Although radiomics in many cases is not yet available for directing patient care decisions, it may be coming soon.
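The workflow described above (acquire images, pair them with clinical data, build a predictive model) can be sketched in miniature. The example below is a deliberately simplified assumption: the "images" are 2x2 grids of made-up intensities, only two features are extracted (mean and variance), and the model is a nearest-centroid classifier. The labels `outcome_a` and `outcome_b` are hypothetical placeholders. Real radiomics pipelines extract hundreds of features and use far more rigorous modeling and validation.

```python
from statistics import mean, pvariance


def extract_features(image):
    """Step 1: reduce an image to numeric features (mean intensity, variance)."""
    pixels = [p for row in image for p in row]
    return (mean(pixels), pvariance(pixels))


def fit_centroids(images, labels):
    """Step 2: pair features with known outcomes; average features per outcome."""
    grouped = {}
    for image, label in zip(images, labels):
        grouped.setdefault(label, []).append(extract_features(image))
    return {
        label: tuple(mean(f[i] for f in feats) for i in range(2))
        for label, feats in grouped.items()
    }


def predict(centroids, image):
    """Step 3: assign a new image to the outcome with the closest average features."""
    fx = extract_features(image)
    return min(
        centroids,
        key=lambda label: sum((a - b) ** 2 for a, b in zip(fx, centroids[label])),
    )


# Made-up training set: uniform images carry one label, textured images the other.
train_images = [
    [[10, 10], [10, 10]],
    [[12, 12], [12, 12]],
    [[0, 20], [20, 0]],
    [[2, 22], [22, 2]],
]
train_labels = ["outcome_a", "outcome_a", "outcome_b", "outcome_b"]

centroids = fit_centroids(train_images, train_labels)
print(predict(centroids, [[11, 11], [11, 12]]))  # a nearly uniform new image
print(predict(centroids, [[1, 21], [21, 1]]))    # a textured new image
```

The point of the sketch is the division of labor: feature extraction turns pixels into numbers, and the model only ever sees those numbers alongside the outcomes it was given.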

Risks

Many articles in the popular media define AI and describe what it can and should do. A key part of understanding its utility is also identifying its shortcomings. One big concern is the potential for inherent biases in the data. As we learned above, AI is merely reacting to patterns in the data; it does not always understand the larger picture. So, if a dataset fed to the system consists mostly of 65-year-old Caucasian males with the clinical details associated with vertigo, the resulting AI model is not going to provide great predictions on how to treat a 19-year-old Hispanic woman with dizziness. The take-home point is that AI is not a panacea for diagnosis and data analysis without understanding what goes in. It is critical to know the purpose of the algorithms, and there must be transparency about the type of data used to train the AI’s decision-making.
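The effect of a skewed training set can be shown with a toy example. In the sketch below, a model learns a single cutoff on a hypothetical symptom score using data from one patient group only, and is then applied to a second group in which the same condition appears at lower scores. The scores, cutoffs, and groups are invented for illustration and carry no clinical meaning.

```python
def accuracy(samples, threshold):
    """Fraction of (score, label) pairs where 'score >= threshold' matches the label."""
    correct = sum((score >= threshold) == bool(label) for score, label in samples)
    return correct / len(samples)


def fit_threshold(samples):
    """Pick the cutoff that best separates the labels in the training data."""
    candidates = sorted({score for score, _ in samples})
    return max(candidates, key=lambda t: accuracy(samples, t))


# Hypothetical groups: the condition is present at scores >= 6 in group A,
# but already at scores >= 3 in group B.
group_a = [(s, int(s >= 6)) for s in range(1, 11)]
group_b = [(s, int(s >= 3)) for s in range(1, 11)]

threshold = fit_threshold(group_a)    # trained on group A only
acc_a = accuracy(group_a, threshold)
acc_b = accuracy(group_b, threshold)
print(f"cutoff={threshold}, accuracy on A={acc_a:.1f}, accuracy on B={acc_b:.1f}")
```

The model looks perfect on the group it was trained on and misses every group B patient whose score falls between the two cutoffs. Nothing in the training data could have warned it, which is why transparency about what went into a model matters.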

What AI Is Not

Although it is important to define what AI is, it is also critical to define what it is not. AI is not a replacement for physicians, now or in the future. Nearly all AI currently available is focused on improving the quality or efficiency of care delivered by healthcare professionals. The distinctly personal human-to-human connection is a critical part of healthcare. Medicine requires both a scientific process and an art of compassion to build a relationship with a patient. AI cannot replicate the personal touch of a doctor-patient connection. Instead of replacing doctors, AI will serve as another critical tool that must be understood, learned, and used to the appropriate degree in different practices. As otolaryngologists, we will face many choices about adopting AI iterations as critical tools in our armamentarium of patient care. Having a clear view of the benefits, risks, and various intangible case details will help us do better by our patients.

References

1. United States Department of State. Artificial Intelligence and Society. https://www.state.gov/artificial-intelligence/. Accessed October 25, 2023.

2. Au Yeung J, Wang YY, Kraljevic Z, Teo JTH. Artificial intelligence (AI) for neurologists: do digital neurones dream of electric sheep? Pract Neurol. 2023 Nov 23;23(6):476-488. doi: 10.1136/pn-2023-003757. PMID: 37977806.

3. Tama BA, Kim DH, Kim G, Kim SW, Lee S. Recent Advances in the Application of Artificial Intelligence in Otorhinolaryngology-Head and Neck Surgery. Clin Exp Otorhinolaryngol. 2020 Nov;13(4):326-339. doi: 10.21053/ceo.2020.00654. Epub 2020 Jun 18. PMID: 32631041; PMCID: PMC7669308.

4. Vasan V, Cheng CP, Lerner DK, Vujovic D, van Gerwen M, Iloreta AM. A natural language processing approach to uncover patterns among online ratings of otolaryngologists. J Laryngol Otol. 2023 Dec;137(12):1384-1388. doi: 10.1017/S0022215123000476. Epub 2023 Mar 20. PMID: 36938802.

5. Maleki F, Le WT, Sananmuang T, Kadoury S, Forghani R. Machine Learning Applications for Head and Neck Imaging. Neuroimaging Clin N Am. 2020 Nov;30(4):517-529. doi: 10.1016/j.nic.2020.08.003. PMID: 33039001.

6. Buch K, Fujita A, Li B, Kawashima Y, Qureshi MM, Sakai O. Using Texture Analysis to Determine Human Papillomavirus Status of Oropharyngeal Squamous Cell Carcinomas on CT. AJNR Am J Neuroradiol. 2015 Jul;36(7):1343-8. doi: 10.3174/ajnr.A4285. Epub 2015 Apr 2. PMID: 25836725; PMCID: PMC7965289.

7. Parmar C, Grossmann P, Rietveld D, Rietbergen MM, Lambin P, Aerts HJ. Radiomic Machine-Learning Classifiers for Prognostic Biomarkers of Head and Neck Cancer. Front Oncol. 2015 Dec 3;5:272. doi: 10.3389/fonc.2015.00272. PMID: 26697407; PMCID: PMC4668290.